The age-old wireless networking problem of optimizing Wi-Fi in a constantly changing radio frequency (RF) environment, or what the industry calls Radio Resource Management (RRM), is a perfect use case for artificial intelligence and machine learning. These technologies can provide enormous value to RRM today, while getting us closer to the ultimate goal of deploying self-driving networks.

Current trends make it clear that IT will be increasingly challenged to support the soaring numbers of mobile and IoT devices, with many reports predicting over 6 billion mobile devices and over 20 billion IoT devices connecting by 2020. Companies can’t afford to hire and train enough helpdesk and networking experts to support this growth. Data science, artificial intelligence and machine learning will become critical tools to accommodate this scale, first by augmenting helpdesk staff with virtual network assistants and then with a self-optimizing network that identifies issues and resolves them automatically, so that IT teams can focus on strategic projects rather than day-to-day reactive troubleshooting.

Radio Resource Management

Why has RRM been such a challenge to date? Most RRM algorithms cannot learn which Wi-Fi channels are likely to experience Dynamic Frequency Selection (DFS) radar interference, even though that knowledge should inform which channels wireless access points (APs) operate on. Today, it is fairly common for RRM to make poor decisions because the interference and channel data it analyzes are collected at night, when the network is idle. Decisions based on that data are often wrong for the conditions users create when they show up the next day, resulting in sub-optimal experiences during real-world usage. Without a feedback loop to correct inaccurate decisions and the ability to continuously optimize performance based on real data, the benefits of RRM will never be realized.

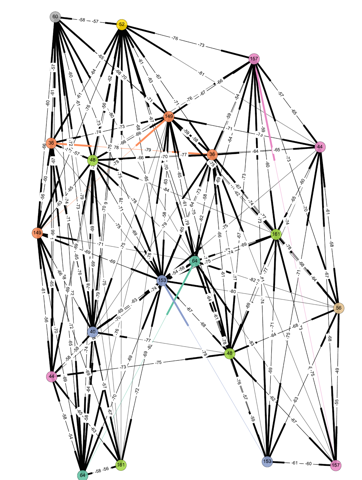

To get a sense of the complexity, the graph shown here depicts a sample channel allocation for a Wi-Fi network, where the dots are the APs (labeled with the allocated channel). The lines depict the level of interference between neighboring APs (signal strength in RSSI, where larger numbers indicate higher interference). As you can see, the graph is highly connected, which makes optimal channel allocation a challenge, especially when you also factor in external influences such as non-Wi-Fi interference or other tenants in your building whose Wi-Fi networks are out of your control.
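To make the allocation problem concrete, here is a minimal Python sketch that treats the APs as a weighted graph and assigns channels greedily. The AP names, RSSI values, channel list and the `interference_weight` mapping are all hypothetical, and a greedy pass is only one simple heuristic, not necessarily what any RRM product uses.

```python
# Hypothetical neighbor graph: (ap_a, ap_b) -> RSSI in dBm at which they hear each other.
# Values closer to zero mean stronger interference.
NEIGHBOR_RSSI = {
    ("ap1", "ap2"): -55,
    ("ap1", "ap3"): -70,
    ("ap2", "ap3"): -60,
    ("ap3", "ap4"): -80,
}
CHANNELS = [36, 40, 44]  # illustrative subset of channels


def interference_weight(rssi_dbm, floor_dbm=-90):
    """Map RSSI to a non-negative weight; signals at or below the floor cost nothing."""
    return max(0, rssi_dbm - floor_dbm)


def co_channel_cost(assignment):
    """Sum of weights over neighbor pairs that ended up on the same channel."""
    return sum(
        interference_weight(rssi)
        for (a, b), rssi in NEIGHBOR_RSSI.items()
        if assignment[a] == assignment[b]
    )


def greedy_assign(aps):
    """Give each AP, in order, the channel that adds the least co-channel cost."""
    assignment = {}
    for ap in aps:
        def added_cost(channel):
            total = 0
            for (a, b), rssi in NEIGHBOR_RSSI.items():
                if ap not in (a, b):
                    continue
                other = b if a == ap else a
                if assignment.get(other) == channel:
                    total += interference_weight(rssi)
            return total

        assignment[ap] = min(CHANNELS, key=added_cost)
    return assignment


plan = greedy_assign(["ap1", "ap2", "ap3", "ap4"])
everyone_on_36 = {ap: 36 for ap in plan}
print(plan, co_channel_cost(plan), co_channel_cost(everyone_on_36))
```

Even in this toy case the result depends on the order in which APs are visited, which hints at why dense, highly connected deployments are hard to optimize.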


Reinforcement Learning

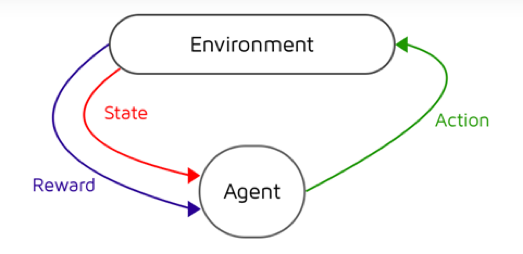

AI can be leveraged with RRM to deliver better user experiences (and overall operational efficiency). One of the core elements that makes this possible is “reinforcement learning,” which works on the principle that an agent takes an action and is then penalized or rewarded based on the result, reinforcing the optimal behavior. This is similar to how toddlers and robots learn to walk: the penalty of falling drives proper behavior, such as smaller steps and attention to balance. Figure 2 shows the core elements required for reinforcement learning: action, state and reward.

Figure 2: Reinforcement Learning

In a Wi-Fi network, the action would be a change in the channel and/or transmit power. The state is determined by key domain classifiers that measure Service Level Expectations (SLE), like capacity, coverage and DFS radar event occurrences. The reward would be an improvement or degradation in the capacity and/or coverage. The system should ultimately optimize the SLEs and, thus, user experience over time as its learning is reinforced to select the optimal actions.
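The action and reward loop can be sketched in a few lines of Python. This is a deliberately simplified, stateless version (an epsilon-greedy multi-armed bandit with optimistic initial values), so it ignores the state component described above. The class name `ChannelAgent`, the channel list and the fixed `SIMULATED_REWARD` values are assumptions made for illustration; in a real network the reward would come from measured changes in capacity or coverage.

```python
import random


class ChannelAgent:
    """Epsilon-greedy agent that learns an average reward per channel."""

    def __init__(self, channels, epsilon=0.1, optimistic_start=1.0, seed=0):
        self.channels = list(channels)
        self.epsilon = epsilon
        # Optimistic starting values push the agent to try every channel once.
        self.value = {ch: optimistic_start for ch in self.channels}
        self.count = {ch: 0 for ch in self.channels}
        self.rng = random.Random(seed)

    def choose(self):
        """Explore with probability epsilon, otherwise pick the best-known channel."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.channels)
        return self.best()

    def learn(self, channel, reward):
        """Update the running average reward for the chosen channel."""
        self.count[channel] += 1
        self.value[channel] += (reward - self.value[channel]) / self.count[channel]

    def best(self):
        return max(self.channels, key=lambda ch: self.value[ch])


# Hypothetical stand-in for "capacity improved or degraded after the change".
SIMULATED_REWARD = {36: -0.2, 40: 0.1, 44: 0.5}

agent = ChannelAgent(channels=[36, 40, 44])
for _ in range(200):
    channel = agent.choose()                         # action
    agent.learn(channel, SIMULATED_REWARD[channel])  # reward

print(agent.best(), agent.value)
```

A fuller treatment would condition the choice on state, for example current SLE measurements, rather than keeping a single value per channel.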

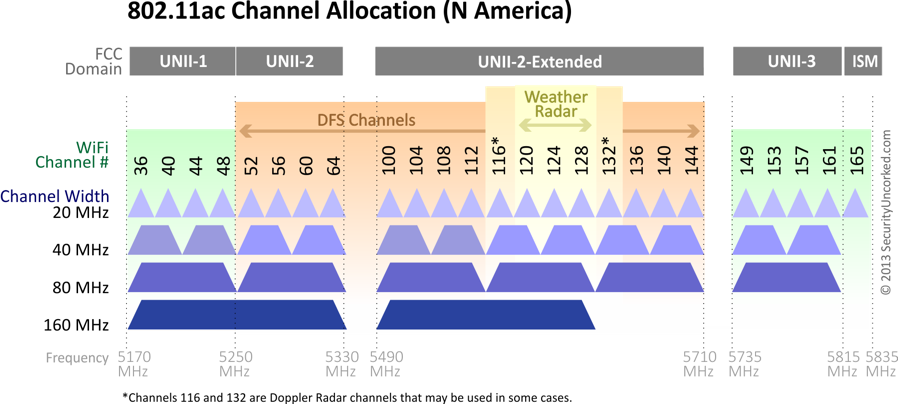

For example, the system should learn which Wi-Fi channels overlap public radar frequencies and are subject to being dynamically disabled. It should then lower the selection weight of these channels to minimize DFS outages that could impact user experience. The accompanying diagram shows the North American channels in the 5 GHz band, where the range of DFS channels is subject to potential radar events.

5 GHz Channels Available for Wi-Fi
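One way to picture "lowering the selection weight" is a per-channel weight that decays each time a radar event is observed. The sketch below is an assumption-laden illustration: the `ChannelWeights` class, the decay factor and the floor are invented for this example, and the channel lists cover the commonly used 20 MHz North American channels, with 52 to 64 and 100 to 144 treated as the DFS range.

```python
# 20 MHz channel numbers in the North American 5 GHz band.
NON_DFS_CHANNELS = [36, 40, 44, 48, 149, 153, 157, 161, 165]
DFS_CHANNELS = [52, 56, 60, 64] + list(range(100, 145, 4))


class ChannelWeights:
    """Tracks a selection weight per channel; radar events shrink it."""

    def __init__(self, channels, decay=0.5, floor=0.05):
        self.weight = {ch: 1.0 for ch in channels}
        self.decay = decay  # hypothetical multiplier applied per radar event
        self.floor = floor  # never fully rule a channel out

    def record_radar_event(self, channel):
        if channel not in DFS_CHANNELS:
            raise ValueError(f"channel {channel} is not a DFS channel")
        self.weight[channel] = max(self.floor, self.weight[channel] * self.decay)

    def ranked(self):
        """Channels from most to least preferred (ties broken by channel number)."""
        return sorted(self.weight, key=lambda ch: (-self.weight[ch], ch))


weights = ChannelWeights(NON_DFS_CHANNELS + DFS_CHANNELS)
weights.record_radar_event(52)
weights.record_radar_event(52)
print(weights.ranked()[:3], weights.weight[52])
```

Keeping a non-zero floor means a channel that saw radar once is deprioritized rather than abandoned, so the system can still return to it if conditions change.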

In addition, the system should start to learn the temporal behavior of non-Wi-Fi interference across a location and avoid it. For example, learning that there will be microwave interference in the cafeteria during lunch hours lets the system select channels that do not overlap during these times.
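The cafeteria example amounts to keeping interference history per channel and per hour of day, then consulting it before picking a channel. Here is a small sketch under that assumption; `InterferenceHistory`, the 2.4 GHz channel numbers and the recorded events are hypothetical.

```python
from collections import defaultdict


class InterferenceHistory:
    """Counts observed non-Wi-Fi interference events per (channel, hour of day)."""

    def __init__(self):
        self._events = defaultdict(int)

    def record(self, channel, hour):
        self._events[(channel, hour % 24)] += 1

    def quietest(self, channels, hour):
        """Return the candidate channel with the fewest events seen at this hour."""
        return min(channels, key=lambda ch: self._events.get((ch, hour % 24), 0))


history = InterferenceHistory()
for _ in range(5):
    history.record(channel=11, hour=12)  # e.g. microwave ovens at lunch
for _ in range(2):
    history.record(channel=6, hour=12)

lunch_choice = history.quietest([1, 6, 11], hour=12)
fallback_choice = history.quietest([6, 11], hour=12)
print(lunch_choice, fallback_choice)
```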

What Does It Take to Implement AI-Driven RRM?

For the above to be effective, a large volume of data is required. One needs to collect data continuously over 24-hour windows so that the history is not lost when the channel optimizations are performed. This is where the power of the modern cloud with elastic compute and storage scale proves to be an enormous benefit.

The system must also be able to classify this data into service level expectations (SLEs) that baseline performance both before and after it activates a new RF plan. This is the only way to maintain an ongoing feedback loop that optimizes results over time.
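The before-and-after baseline can be as simple as comparing the average of a service-level metric across the two windows and deciding whether the new RF plan earned its keep. The function name `evaluate_rf_plan`, the `min_gain` threshold and the sample values are assumptions for illustration only.

```python
from statistics import mean


def evaluate_rf_plan(before, after, min_gain=0.02):
    """Compare a service-level metric before and after an RF plan change.

    `before` and `after` are samples of the same metric (for example, the
    fraction of user-minutes meeting a capacity target). Returns a verdict
    and the observed change in the mean.
    """
    delta = mean(after) - mean(before)
    verdict = "keep" if delta >= min_gain else "revert"
    return verdict, delta


good_verdict, good_delta = evaluate_rf_plan([0.80, 0.82, 0.78], [0.90, 0.88, 0.92])
flat_verdict, flat_delta = evaluate_rf_plan([0.80, 0.82, 0.78], [0.79, 0.80, 0.81])
print(good_verdict, flat_verdict)
```

In a reinforcement learning setup, the same delta could double as the reward signal fed back to the agent.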

Finally, reinforcement learning should be combined with anomaly detection so that the system detects problems as soon as they occur and continuously adapts to changing conditions to deliver the best end-user experience.
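As a rough illustration of the anomaly detection side, the sketch below flags a metric sample that sits far from its recent history using a z-score. This is a simple statistical stand-in chosen for brevity, not a claim about how any product detects anomalies; the function name, threshold and sample values are hypothetical.

```python
from statistics import mean, pstdev


def is_anomaly(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


recent_throughput_mbps = [50, 52, 49, 51, 50, 48]
sudden_drop = is_anomaly(recent_throughput_mbps, 20)
normal_sample = is_anomaly(recent_throughput_mbps, 51)
print(sudden_drop, normal_sample)
```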

The ultimate promise of AI-driven RRM is very appealing: a self-driving network that replaces complex, data-intensive tasks with automated insight and action using machine learning. The result is a better user experience, coupled with enhanced operational efficiency, as IT can then focus on strategic business objectives instead of reactive, mundane tasks. While this has been the promise of RRM for over a decade, new AI-driven platforms have finally made it a reality.